OVAL-Grasp: Open-Vocabulary Affordance Localization for Task-Oriented Grasping

Presented at the 2025 International Symposium on Experimental Robotics (ISER 2025)!

[website]

Robots often struggle with task-oriented grasps in unstructured environments. OVAL-Grasp is a zero-shot approach that leverages large language models (LLMs) and vision-language models (VLMs) to identify and grasp object parts based on a given task. It uses RGB images and task prompts to segment actionable regions and generate heatmaps for grasping. Evaluated on 20 household objects across 3 tasks with the Fetch robot, OVAL-Grasp achieved a 95% success rate in part identification and a 78.3% success rate in grasping the actionable area. It also performs well under occlusion and in cluttered scenes, showcasing robust task-oriented grasping capabilities.
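One simple way to turn a grasp heatmap into a single grasp target is to take the highest-scoring pixel inside the segmented actionable region. The sketch below assumes the heatmap and the part mask are already available as same-sized NumPy arrays; `select_grasp_pixel` is a hypothetical helper for illustration, not OVAL-Grasp's actual grasp-selection code.

```python
from __future__ import annotations

import numpy as np


def select_grasp_pixel(heatmap: np.ndarray, part_mask: np.ndarray) -> tuple[int, int]:
    """Hypothetical helper: return (row, col) of the best heatmap score inside the part mask."""
    if heatmap.shape != part_mask.shape:
        raise ValueError("heatmap and part_mask must have the same shape")
    if not part_mask.any():
        raise ValueError("part_mask is empty")
    # Suppress scores outside the actionable part so the argmax cannot land elsewhere.
    masked = np.where(part_mask, heatmap, -np.inf)
    row, col = np.unravel_index(np.argmax(masked), masked.shape)
    return int(row), int(col)
```

Masking before the argmax keeps a strong but task-irrelevant response (for example, on a knife blade when the task calls for the handle) from being chosen.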

Language-Guided Object Search in Agricultural Environments

Presented at the 2025 IEEE International Conference on Robotics & Automation (ICRA 2025)!

[arxiv]

To create a more sustainable future, we need robots that can assist on farms and in gardens, reducing the mental and physical workload of farm workers at a time of increasing crop shortages. We tackle the problem of intelligent object retrieval in a farm environment, providing a method that uses a Large Language Model (LLM) to let a robot semantically reason about the location of an unseen goal object given a set of previously seen objects in the environment. We leverage object-to-object semantic relationships to determine which location the robot should visit to most likely find the goal object. We deployed our system on the Boston Dynamics Spot robot and achieved an object search success rate of 79%.
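The location-selection step can be sketched as ranking candidate locations by how semantically related their previously seen objects are to the goal. In this minimal sketch, `relatedness` is a hypothetical stand-in for an LLM query that returns a score in [0, 1], and each location is scored by its most related object; the actual prompting and scoring used on Spot may differ.

```python
from __future__ import annotations

from typing import Callable, Iterable


def rank_locations(
    goal: str,
    seen: Iterable[tuple[str, str]],
    relatedness: Callable[[str, str], float],
) -> list[tuple[str, float]]:
    """Rank locations by the best goal-to-object relatedness among objects seen there.

    seen: (object_name, location_id) pairs from earlier exploration.
    relatedness: hypothetical scorer, e.g. a wrapper around an LLM prompt.
    """
    best: dict[str, float] = {}
    for obj, loc in seen:
        score = relatedness(goal, obj)
        best[loc] = max(best.get(loc, float("-inf")), score)
    # Highest-scoring location first: that is where the robot should search next.
    return sorted(best.items(), key=lambda kv: kv[1], reverse=True)


# Toy usage with a lookup table standing in for LLM scores.
toy_scores = {
    ("trowel", "watering can"): 0.8,
    ("trowel", "tomato plant"): 0.3,
    ("trowel", "hose"): 0.6,
}
seen_objects = [("watering can", "shed"), ("tomato plant", "bed A"), ("hose", "shed")]
print(rank_locations("trowel", seen_objects, lambda g, o: toy_scores[(g, o)]))
# [('shed', 0.8), ('bed A', 0.3)]
```

Taking the maximum rather than the sum keeps a location from winning just because many weakly related objects were seen there.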

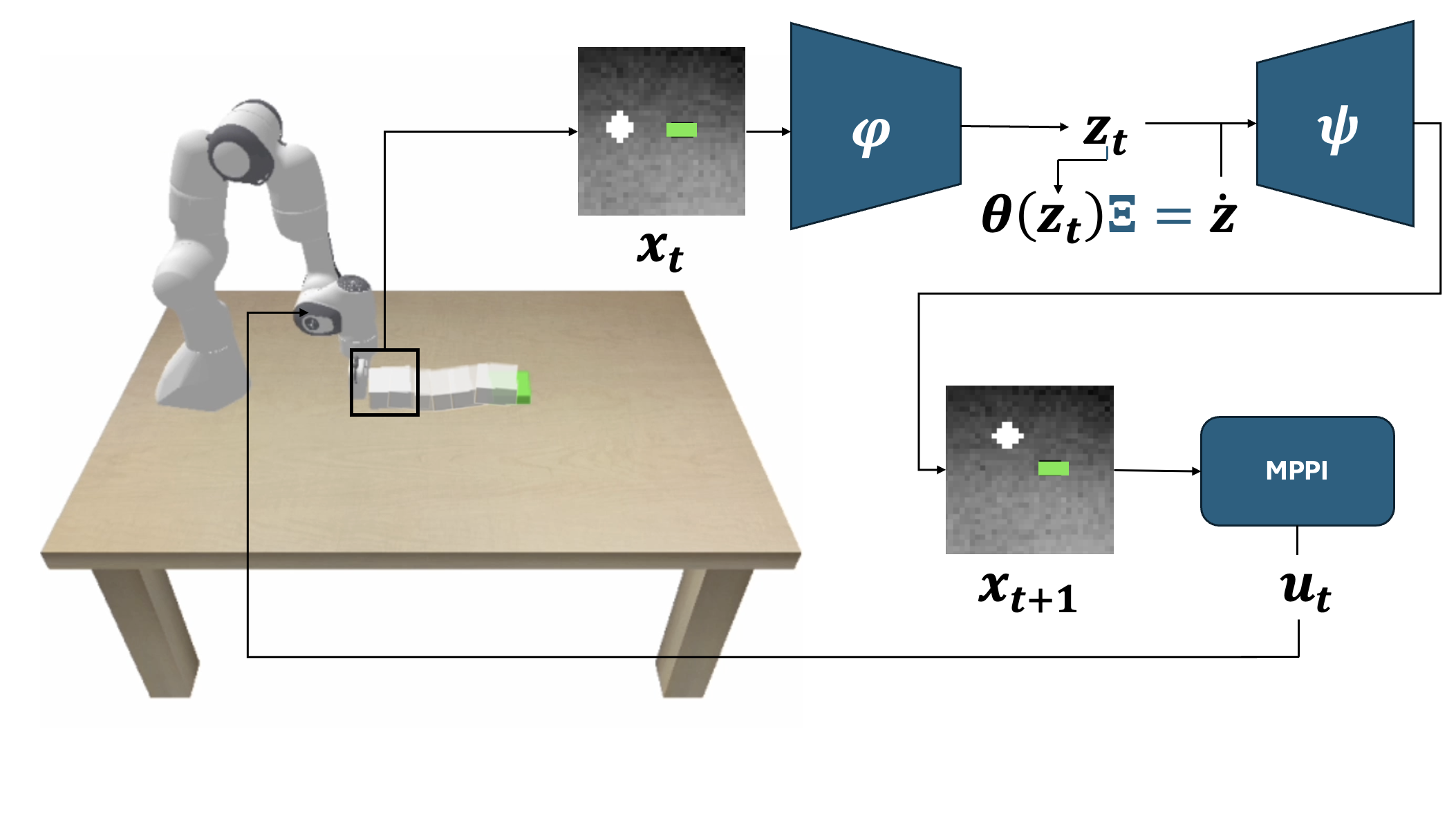

RoboSINDy: Learning Sparse Latent Space Dynamics for Planar Pushing

RoboSINDy learns sparse, interpretable latent dynamics for planar pushing. Using a Franka Panda arm and a two-dimensional latent space, the learned model drives a block to arbitrary goal poses with high accuracy and smooth trajectories, demonstrating robust, efficient control through learned low-dimensional dynamics.
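SINDy (Sparse Identification of Nonlinear Dynamics) models dynamics as a sparse linear combination of candidate functions, classically fit with sequentially thresholded least squares (STLSQ). The sketch below applies STLSQ to a 2D latent state `z` and an assumed 2D push action `u`, fitting discrete-time differences `z[t+1] - z[t]`. It assumes the latent encodings are already given. The polynomial library, threshold, and helper names are illustrative assumptions. RoboSINDy's actual library, encoder, and training procedure are not described here.

```python
import numpy as np


def library(Z: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Illustrative candidate terms [1, z1, z2, u1, u2, z1*z2, z1^2, z2^2], one row per sample."""
    z1, z2 = Z[:, 0], Z[:, 1]
    return np.column_stack([np.ones(len(Z)), z1, z2, U[:, 0], U[:, 1], z1 * z2, z1**2, z2**2])


def stlsq(theta: np.ndarray, dz: np.ndarray, threshold: float = 0.05, iters: int = 10) -> np.ndarray:
    """Sequentially thresholded least squares; returns sparse coefficients Xi with dz ~ theta @ Xi."""
    xi = np.linalg.lstsq(theta, dz, rcond=None)[0]
    for _ in range(iters):
        small = np.abs(xi) < threshold
        xi[small] = 0.0  # prune weak terms
        for k in range(dz.shape[1]):
            keep = ~small[:, k]
            if keep.any():
                # Refit each latent dimension using only its surviving terms.
                xi[keep, k] = np.linalg.lstsq(theta[:, keep], dz[:, k], rcond=None)[0]
    return xi


def step(z: np.ndarray, u: np.ndarray, xi: np.ndarray) -> np.ndarray:
    """One-step latent prediction: z[t+1] = z[t] + Theta(z[t], u[t]) @ Xi."""
    return z + (library(z[None, :], u[None, :]) @ xi)[0]
```

Each nonzero entry of `xi` reads directly as a term in the latent dynamics, which is what makes the learned model interpretable. A planner can then roll `step` forward to choose pushes.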

3D Object Localization with Signed Distance Fields (SDFs)

This project presents a robust method for localizing objects in 3D space using Signed Distance Fields (SDFs). An SDF represents an object's surface as a volumetric field in which each point's value is its signed distance from the surface, enabling efficient geometric and normal-based reasoning. Given a point cloud of an object and its corresponding SDF, the approach estimates the object's 6-DoF pose (rotation and translation) by minimizing both the distances of the transformed points to the SDF surface and the angle between the surface normals derived from the SDF gradients and those derived from the point cloud. The method is evaluated on objects from the YCB dataset and achieves high accuracy on both full and partial point cloud views, even for symmetric and complex geometries.
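The objective described above can be sketched with an analytic box SDF standing in for a precomputed YCB object SDF. Under the assumptions below, the pose is parameterized as a rotation vector plus a translation, and the normal term uses `1 - cos θ` as a smooth proxy for the angle between normals. SDF gradients come from central differences, and SciPy's derivative-free Powell optimizer does the minimization. The box SDF, the weight `0.1`, and the helper names are illustrative choices, not the project's actual implementation.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.spatial.transform import Rotation


def box_sdf(p: np.ndarray, half_extents: np.ndarray) -> np.ndarray:
    """Exact signed distance to an origin-centred, axis-aligned box (negative inside)."""
    q = np.abs(p) - half_extents
    outside = np.linalg.norm(np.maximum(q, 0.0), axis=-1)
    inside = np.minimum(q.max(axis=-1), 0.0)
    return outside + inside


def sdf_normals(sdf, p: np.ndarray, h: float = 1e-5) -> np.ndarray:
    """Unit surface normals from central-difference SDF gradients."""
    grads = np.stack([(sdf(p + h * e) - sdf(p - h * e)) / (2 * h) for e in np.eye(3)], axis=-1)
    return grads / np.maximum(np.linalg.norm(grads, axis=-1, keepdims=True), 1e-12)


def pose_loss(x, points, normals, sdf, weight: float = 0.1) -> float:
    """x = [rotation vector (3), translation (3)] mapping the object frame to the world frame."""
    R = Rotation.from_rotvec(x[:3]).as_matrix()
    t = x[3:]
    # Bring observed world-frame points and normals into the object frame: R^T (p - t), R^T n.
    p_obj = (points - t) @ R
    n_obj = normals @ R
    dist_term = np.mean(sdf(p_obj) ** 2)
    cos = np.sum(sdf_normals(sdf, p_obj) * n_obj, axis=-1)
    angle_term = np.mean(1.0 - cos)
    return float(dist_term + weight * angle_term)


def estimate_pose(points, normals, sdf, x0):
    """Minimize the combined distance/normal objective from an initial guess x0."""
    res = minimize(pose_loss, x0, args=(points, normals, sdf), method="Powell")
    return res.x, float(res.fun)
```

Like any local method, this needs a reasonable initial guess. For symmetric objects, several poses can reach the same minimum, so the normal term only helps disambiguate where the geometry allows it.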

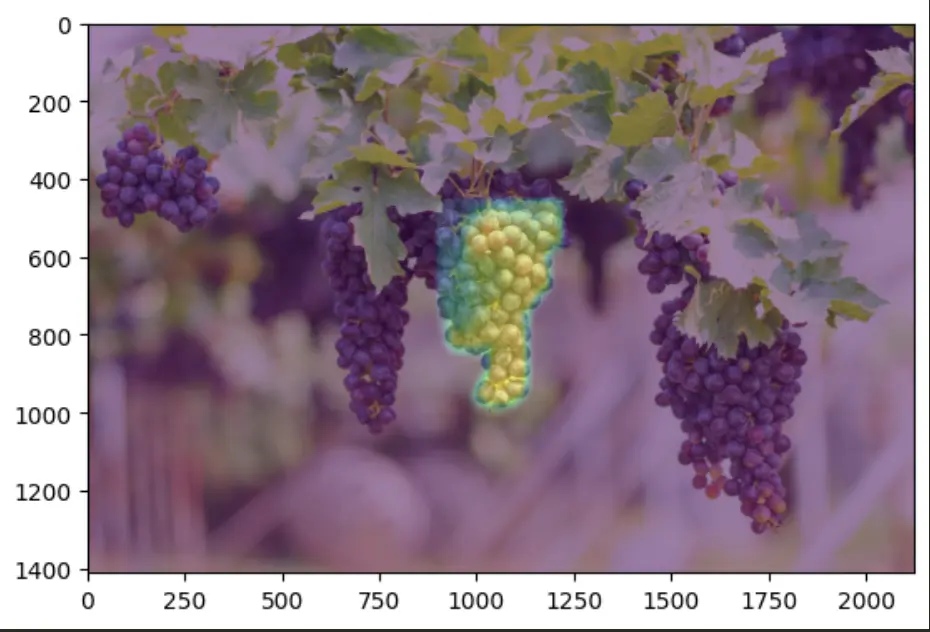

GrapeRob: A Grape Localization Pipeline for Automated Robotic Harvesting

Presented at the 2024 Michigan AI Symposium!

A vision pipeline for grape state estimation to support automated robotic harvesting. This pipeline consists of a grape bunch and stem segmentation model built using PyTorch and the WGISD dataset, and a 3D reconstruction algorithm that combines the grape masks and a depth map to reconstruct a grape point cloud for pose estimation. Read more about it on the project website!
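The reconstruction step combines a segmentation mask with an aligned depth map by back-projecting each masked pixel through a pinhole camera model. This minimal sketch assumes metric depth registered to the RGB image and known intrinsics `fx, fy, cx, cy`. `mask_to_point_cloud` is a hypothetical helper, and GrapeRob's pose estimation on top of the resulting cloud is not shown.

```python
import numpy as np


def mask_to_point_cloud(depth: np.ndarray, mask: np.ndarray,
                        fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Back-project masked pixels with valid depth into camera-frame 3D points, shape (N, 3)."""
    # Ignore pixels outside the mask and pixels with missing (zero) depth.
    valid = mask.astype(bool) & (depth > 0)
    rows, cols = np.nonzero(valid)
    z = depth[rows, cols]
    # Pinhole model: u = fx * x / z + cx, v = fy * y / z + cy, solved for x and y.
    x = (cols - cx) * z / fx
    y = (rows - cy) * z / fy
    return np.column_stack([x, y, z])
```

Running this once on the bunch mask and once on the stem mask yields separate clouds, which a downstream step can use to estimate where to cut.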

Open-Vocabulary Object Localization with OWL-ViT and FAST-SAM

Since open-vocabulary detectors produce noisy outputs when presented with even slightly cluttered scenes, we combine an open-vocabulary detector with a probabilistic filter to compute better object state estimates for robotic grasping. Specifically, we combined OWL-ViT and Fast Segment Anything (FastSAM), using fast CUDA computation to produce multiple segmentation masks of an object from just a text prompt, and then fused these estimates with a probabilistic filter. The result is a highly reliable and accurate object tracking system that robots can use for open-vocabulary grasping!
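The section does not specify which probabilistic filter is used, so the sketch below swaps in one simple option. It is a Kalman filter for a static object position with per-axis (diagonal) covariance and a chi-square gate that rejects outlier measurements, such as the centroid of a mask that latched onto the wrong object. It assumes each mask has already been converted into a 3D centroid measurement. The class name and noise parameters are hypothetical.

```python
import numpy as np


class StaticObjectFilter:
    """Hypothetical Kalman filter for a static 3D position with diagonal covariance and gating."""

    def __init__(self, meas_std: float = 0.01, process_std: float = 0.001, gate_chi2: float = 11.345):
        self.x = None                  # fused position estimate, shape (3,)
        self.P = None                  # per-axis variance, shape (3,)
        self.R = meas_std ** 2         # measurement variance per axis
        self.Q = process_std ** 2      # small drift allowance per update
        self.gate_chi2 = gate_chi2     # ~99% quantile of chi-square with 3 DOF

    def update(self, z) -> bool:
        """Fuse one 3D measurement; return False if it was rejected as an outlier."""
        z = np.asarray(z, dtype=float)
        if self.x is None:
            self.x, self.P = z.copy(), np.full(3, self.R)
            return True
        P_pred = self.P + self.Q
        S = P_pred + self.R                          # innovation variance per axis
        d2 = float(np.sum((z - self.x) ** 2 / S))    # squared Mahalanobis distance
        if d2 > self.gate_chi2:
            self.P = P_pred                          # keep the prediction, skip the outlier
            return False
        K = P_pred / S                               # per-axis Kalman gain
        self.x = self.x + K * (z - self.x)
        self.P = (1.0 - K) * P_pred
        return True
```

Gating matters more than the fusion itself here: a single wrong detection far from the current estimate is dropped instead of dragging the grasp target toward it.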

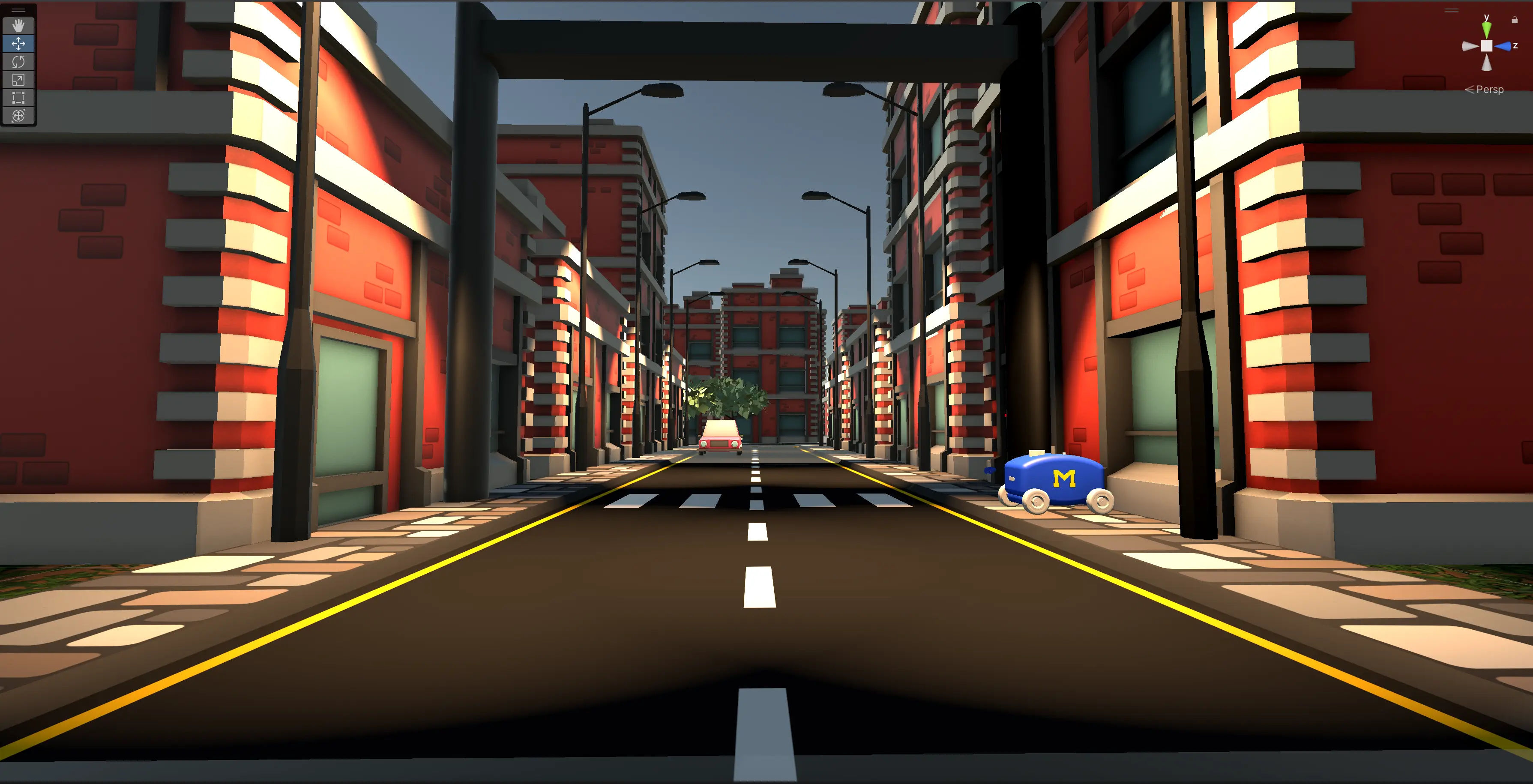

Crosswalk Buddy

Presented at the 2024 Michigan Robotics Undergraduate Research Symposium!

Crosswalk Buddy is an independent research project under UM Robotics with the goal of developing a robot that increases safety in pedestrian spaces, specifically by improving drivers' visibility of pedestrians in low-visibility scenarios. The project has two phases: HRI research and autonomy software development. We developed a simulation of the robot in a city environment to conduct human trials that gauge which proximities and positions of the robot create a comfortable experience for the pedestrian. In parallel, we have been developing the autonomy stack for a real robot platform, including a vision system for pedestrian state estimation built with YOLOv3 and simple 1D Kalman filtering.
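A scalar (1D) Kalman filter is a natural fit for smoothing one coordinate of a YOLOv3 detection across frames, such as a pedestrian's bounding-box center or estimated distance. This minimal sketch assumes a random-walk motion model with hand-picked process noise `q` and measurement noise `r`. The project's actual state definition and noise values are not stated.

```python
class Kalman1D:
    """Scalar Kalman filter with a random-walk model: x_k = x_{k-1} + w, w ~ N(0, q)."""

    def __init__(self, x0: float, p0: float = 1.0, q: float = 1e-3, r: float = 0.1):
        self.x, self.p = x0, p0   # state estimate and its variance
        self.q, self.r = q, r     # process and measurement noise variances

    def step(self, z: float) -> float:
        """Predict, then correct with measurement z; returns the filtered estimate."""
        self.p += self.q                    # predict: uncertainty grows
        k = self.p / (self.p + self.r)      # Kalman gain
        self.x += k * (z - self.x)          # correct toward the measurement
        self.p *= 1.0 - k                   # posterior variance shrinks
        return self.x
```

A larger `q` relative to `r` makes the filter track fast pedestrian motion more closely, while a smaller ratio suppresses more detection jitter.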